The author of the Bitcoin Energy Consumption Index makes fundamentally flawed assumptions, causing it to demonstrably overestimate the electricity consumption of Bitcoin miners by 1.5× to 2.8×, and likely by 2.2×.

BECI starts by calculating average mining revenues from a 439-day (variable) moving average of the Bitcoin and Bitcoin Cash prices. It then assumes a fixed 60% share of these revenues is spent on electricity costing $0.05/kWh. That is it. There is nothing sophisticated about his model. His first error is that 60% is not representative of current hardware; real-world data shows the lifetime average percentage is between 6.3% and 38.6%. His second error is that the averaging period is excessively variable and poorly justified: it has increased from 60 to 439 days, which changed his electricity consumption figures twofold.

This motivated me to research and publish my own energy estimate using a model built from real-world data: Electricity consumption of Bitcoin: a market-based and technical analysis estimates 14.19/18.40/27.47 TWh/yr (lower bound/best guess/upper bound) vs. BECI claiming 40.24 TWh/yr as of 11 January 2018.

[This post is a live document. Last update on 21 March 2018.]

- Flaw #1: BECI fails to apply economic theory

- Flaw #2: BECI’s author misread a working paper and arbitrarily decided the ratio of electrical opex to mining revenues was “65%” then “60%”

- Flaw #3: BECI wrongly assumes electricity consumption and Bitcoin price are correlated over a “few weeks”

- Flaw #3.1: BECI’s energy estimate can double/halve based on the author’s frequent changes

- Flaw #4: BECI uses misleading terminology

- Flaw #5: BECI’s supplemental material is also flawed

- Flaw #6: Author misrepresents Morgan Stanley’s reports

- Flaw #7: Fabricated forecast chart

- Flaw #8: “Bitcoin Cash” included in BECI

- Flaw #9: Claim that CBECI supports BECI

- Flaw #10: Same electricity consumption reported for months at a time

- How to fix BECI?

- Conclusion

- Footnotes

Flaw #1: BECI fails to apply economic theory

[Update: I removed flaw #1. BECI’s author addressed it: he used to assume 100% of mining revenues were spent on electricity. However read flaw #2…]

Flaw #2: BECI’s author misread a working paper and arbitrarily decided the ratio of electrical opex to mining revenues was “65%” then “60%”

Initially, BECI’s author assumed that 100% of mining revenues were spent on electricity, leaving miners unable to recover capex, let alone to make profits.

I pointed out that 16nm ASIC miners are spending a small fraction (~15%) of mining revenues on electricity. So in his “final release” he made a first attempt to find the fraction of mining revenues spent on electricity. He found and quoted an SSRN working paper by Tomaso Aste:

“Tomaso Aste (2016) […] implies that the average costs of mining are closer to 55 percent of the available miner income. Aste, however, doesn’t provide many details along with his estimate. To be on the conservative side, the average cost percentage used to calculate the Index is set at 65 percent”

However Aste never implies 55%. Aste writes:

“it can be estimated that, with current hardware, the computation of a billion of hashes consumes, with state-of-the-art technology, between 0.1 to 1 Joule of energy. This implies that currently about a billion Watts are consumed globally every second (1GW/sec [sic]) to produce a valid proof of work for Bitcoin.”

At the time of Aste’s writings in June 2016 the network’s hash rate was 1500 PH/s, therefore “0.1 to 1 Joule” implies 150 MW to 1.5 GW, which is within an order of magnitude of 1 GW. The hourly cost of 1 GW is $50k assuming $0.05/kWh, or $8333 per 10-minute Bitcoin block, which Aste compares to the per-block income of $15000 at the time. This is where the 55% comes from: 8333 / 15000 ≈ 55%.

But all these figures are merely orders of magnitude computed from “0.1 to 1 Joule.” I even emailed Aste to erase any doubt; he replied:

“Yes Marc, we do not know which is the average consumption per GH, it depends on the hardware, we can only produce order of magnitude estimates.”

All Aste implies is that the electrical consumption is 150-1500 MW, which corresponds to a per-block electricity cost of $1250-12500, or 8.3-83.3% of the mining income.

BECI’s author misread a working paper by interpreting an order of magnitude as an exact estimate (55%) and arbitrarily picked “65%” when in fact the paper merely implies the percentage is somewhere between 8.3 and 83.3%.
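The arithmetic behind this range can be checked with a short script. This is only a sketch using the figures quoted above (1500 PH/s, $0.05/kWh, $15000 per block, six blocks per hour); the function and constant names are mine, chosen for illustration.

```python
# Figures quoted in the text (June 2016).
HASHRATE_GH_PER_S = 1500e6     # 1500 PH/s expressed in GH/s
PRICE_USD_PER_KWH = 0.05       # electricity price assumed in the text
BLOCK_INCOME_USD = 15000       # per-block mining income at the time
BLOCKS_PER_HOUR = 6            # one block every 10 minutes


def cost_share(joules_per_gh):
    """Return (power in MW, electricity cost per block in USD, share of block income)."""
    power_w = joules_per_gh * HASHRATE_GH_PER_S          # J/GH * GH/s = W
    cost_per_hour = power_w / 1000 * PRICE_USD_PER_KWH   # kW * USD/kWh = USD per hour
    cost_per_block = cost_per_hour / BLOCKS_PER_HOUR
    return power_w / 1e6, cost_per_block, cost_per_block / BLOCK_INCOME_USD


# Evaluate both ends of Aste's "0.1 to 1 Joule" range.
for efficiency in (0.1, 1.0):
    mw, usd, share = cost_share(efficiency)
    print(f"{efficiency} J/GH: {mw:.0f} MW, ${usd:.0f} per block, {share:.1%} of income")
```

The two ends of the range differ by a factor of ten, which is why a single figure such as 55% cannot be read out of the paper.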

I emailed BECI’s author. He never replied. Some time later he silently removed references to Aste’s paper without letting me know. Around April 2017 I checked his site, saw the references removed, but he still stuck with “65%” with a second attempt to justify his chosen percentage. In his update in Live Energy Consumption Index he assumes:

- Lifetime electrical costs of Antminer S9 = $745.20-1159.20 over 450-700 days

- Only other non-electrical cost is the production cost of the hardware = $500

- 60-70% of costs appear to be spent on electricity, therefore approximately 65% of Bitcoin revenues are spent on electricity

This flawed logic is ridden with multiple errors:

- The majority of S9 rigs are purchased by customers, and certainly not at the production cost of $500. The launch price in June 2016 was $2100, in May 2017 it was at its lowest at $1058, then it increased, in December 2017 it reached $2320, etc. If we assume the average S9 costs $2000, it changes the percentage to 27-37% (instead of 60-70%).

- This logic assumes not only miners acquiring S9 rigs under market price at $500, but also making zero profits (hardware capex + electrical opex = mining revenues.) In reality mining is highly profitable. This is why the global hashrate has been shooting up for years! I modeled profitability for all mining rigs released in the last 3 years in the CSV files published in Economics of mining; they show the real-world share of revenues spent on electricity for the S9 is currently 16.2% (lifetime average is 12.3%) as of 11 March 2018.

- Somewhat less important, but worth mentioning: he wrongly assumes all miners will operate their machines until their very last profitable day (day 450 or 700.) In fact miners often upgrade before reaching the true end-of-life. For example Economics of mining shows it makes sense to upgrade S5 units with S7 units after only 397 days in operation, at a time where the S5 spends only 37.1% of its daily revenues on electricity.
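The effect of the hardware price alone can be reproduced in a few lines. This sketch keeps BECI's own zero-profit assumption (electricity + hardware = revenues), which the second point above rejects, and only swaps the $500 production cost for an assumed $2000 average purchase price. The function name is illustrative.

```python
# Lifetime electricity cost of an Antminer S9 over 450 and 700 days, as quoted by BECI.
LIFETIME_ELECTRICITY_USD = (745.20, 1159.20)


def electricity_share(electricity_usd, hardware_usd):
    """Share of total cost spent on electricity when hardware is the only other cost."""
    return electricity_usd / (electricity_usd + hardware_usd)


# Compare BECI's $500 production cost with a $2000 market price.
for hardware_usd in (500, 2000):
    low, high = (electricity_share(e, hardware_usd) for e in LIFETIME_ELECTRICITY_USD)
    print(f"hardware ${hardware_usd}: {low:.0%} to {high:.0%} spent on electricity")
```

Even before accounting for profits, the realistic hardware price roughly halves the percentage.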

Around April 2017 BECI silently replaced 65% with 60% [1] (his third change), but this still does not address the above errors. BECI’s author defends his choice by saying “eventually” electricity costs will converge to 60% of revenues. In other words his model is not based on current real-world data/percentages but on his personal future expectations.

Flaw #3: BECI wrongly assumes electricity consumption and Bitcoin price are correlated over a “few weeks”

BECI’s author used to calculate the moving average of the Bitcoin price over a “few weeks.” This average price is used to determine average mining revenues, from which a 60% share is assumed to be spent on electricity.

I pointed out it takes months for miners to plan, permit, finance, build and launch a typical industrial mining farm. Therefore it was unrealistic for his model to assume electricity consumption trailed price by a “few weeks.”

He later clarified the exact averaging period: it was 60 days, which is perfectly fine in my opinion. However read flaw #3.1…

Flaw #3.1: BECI’s energy estimate can double/halve based on the author’s frequent changes

BECI’s author estimates electricity costs (consumption) from 2 parameters:

Average revenues × 60% = electricity costs

As flaw #2 explained, he should have decreased his 60% parameter. But instead, he attempted to (insufficiently) reduce his overestimation by reducing the other parameter, average revenues. He has been calculating the moving average of the Bitcoin price over a variable period of time that keeps getting longer and longer:

Between 2017-05-24 and 2017-05-31 BECI’s author changed the averaging period from 60 days to 120 days. When asked on what day the change was made, he replied vaguely “It may vary per day.”

Another change was made between 2017-05-31 and 2017-06-20; the average is now computed over 150 days.

Another change was made between 2017-07-10 and 2017-07-21; the average is now computed over 200 days. I asked him when this change was made and he again replied vaguely “Let’s say mostly during the first two weeks of June.”

Another change was made between 2017-07-29 and 2017-08-14; the averaging period is no longer disclosed, but reverse engineering the numbers indicates it is still 200 days.

As of 2018-01-15 the average is still undisclosed but now appears to be computed over 300 days.

As of 2018-03-12 I was told the average (still undisclosed on the site) is now computed over 439 days.

The trend of Bitcoin’s price has generally been upward, therefore lengthening the averaging period decreases the average price, decreases the average revenues, and decreases his electricity consumption estimate. For example, lengthening the averaging period from 60 to 439 days, as he has done, halves BECI’s estimate. [2]

BECI’s author effectively took the liberty to change his electricity consumption estimate twofold by tweaking a parameter neither disclosed, nor documented, nor justified.
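To see why the averaging period matters so much, here is a minimal sketch of a BECI-style calculation as described above: average price, then revenues, then 60% spent on electricity at $0.05/kWh. It is not BECI's actual code, which is not published. As a simplifying assumption of mine, it counts only the 12.5 BTC block subsidy over 144 blocks per day and ignores transaction fees and Bitcoin Cash. The average prices are the ones given in footnote 2.

```python
BLOCKS_PER_DAY = 144        # one block every 10 minutes
BLOCK_SUBSIDY_BTC = 12.5    # assumption: subsidy only; fees and Bitcoin Cash ignored


def beci_style_twh_per_year(avg_price_usd, share=0.60, usd_per_kwh=0.05):
    """Electricity estimate (TWh/yr) from an average price, BECI-style."""
    daily_revenue_usd = avg_price_usd * BLOCK_SUBSIDY_BTC * BLOCKS_PER_DAY
    daily_kwh = daily_revenue_usd * share / usd_per_kwh
    return daily_kwh * 365 / 1e9    # kWh -> TWh


# Average Bitcoin prices as of 14 March 2018 (footnote 2), keyed by averaging period in days.
AVERAGE_PRICE_USD = {60: 10300, 439: 5100}

estimates = {days: beci_style_twh_per_year(p) for days, p in AVERAGE_PRICE_USD.items()}
ratio = estimates[60] / estimates[439]
print(f"60-day window gives {ratio:.2f}x the 439-day estimate")
```

Because the estimate is linear in the average price, the choice of window scales the result directly: whoever picks the window picks the answer.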

After asking the author 5 times to clarify his constant changes to the moving average period he eventually replied in a private email it was determined as such:

“Average of (Maximum of (volatility t-1, decay factor × decaying volatility t-1) × reference days)”

“With a decay factor so low that without a new volatility peak it’s practically static. So here’s why I’m saying it’s fine to use a static number for most parts, and I update this on the BECI page when the difference is interesting enough [ed.: the BECI page no longer documents it] > there will always be some fine-tuning mismatches on multiple parameters. The information provided always allows for getting close enough in reproducing, as shown it did. If the fine-tuning is actually relevant it’s a different story, but again, that really depends on the purpose.”

His vague and cryptic explanation answers nothing and raises even more questions:

- First and foremost, the author provides no evidence to justify his choice of formula. What is the rationale behind it? What makes 439 days the right averaging period? Why not 200 or 800 days? The author picked an arbitrary value that “felt right” without explanation.

- The formula is grossly ill-specified. What time period is t-1? What is reference days? What is decay factor? His underspecification makes BECI unreproducible and unverifiable.

- The author’s vague answers as to when he made changes to the averaging period indicate he does not even properly track them. In an email he told me getting this information would require “to go through thousands of page versions to retrieve this”. These changes should be made public.

Fundamentally, the averaging period is a kludge the author needs as his model estimates electricity consumption based solely on the very volatile Bitcoin price, instead of based on the hashrate which is a real-time indicator of electricity consumption.

Furthermore, the averaging period kludge tries to achieve two incompatible goals:

- It tries to represent the fact that miners’ investments in hashrate capacity (and hence electricity consumption) usually trail the Bitcoin price by a few months.

- It tries to counterbalance BECI’s excessive overestimate that 60% of revenues are spent on electricity. I demonstrated in Electricity consumption of Bitcoin: a market-based and technical analysis that BECI still overestimates consumption by 2.2×, therefore BECI would technically need to change the averaging period from 439 days to approximately 1000 days. [2]

Herein lies the incompatibility: an averaging period of a few months would overestimate electricity consumption, while an averaging period of 1000 days would be correct on average but would completely smooth out fluctuations of the real-world hashrate, and would therefore sometimes overestimate and sometimes underestimate electricity consumption.

BECI’s author’s insistence on not changing his unrealistic 60% parameter forces him to resort to a kludge—the averaging period—that cannot accurately represent real-world electricity consumption.

Flaw #4: BECI uses misleading terminology

A more minor complaint I have about BECI is that it claims to compare Bitcoin to the energy used by countries (electricity + oil + coal + gas…) when in fact it compares it only to their electricity usage. BECI references the International Energy Agency for these numbers, and in my opinion BECI should follow this agency’s example and use proper terminology regarding energy vs electricity. Notably it should title the chart “Electricity consumption by country”, and should say “The entire Bitcoin network now consumes more electricity than a number of countries”. I reported this to the author on 30 December 2016, but he does not seem to want to fix it…

Flaw #5: BECI’s supplemental material is also flawed

On 17 April 2017, the author published supplemental material that continues to make the same mistakes, and introduces new ones.

His upper bound for the total electricity consumption still ignores capex by assuming miners spend 100% of their revenues on electricity (see flaw #1).

His lower bound assumes mining machines are always operated until their very last profitable day. In fact miners routinely decommission older hardware that is still slightly profitable and replace it with more efficient and significantly more profitable hardware.

Flaw #6: Author misrepresents Morgan Stanley’s reports

In January 2018, BECI’s author added a Criticism and Validation section, quoting a report from analysts at Morgan Stanley: Bitcoin ASIC production substantiates electricity use; points to coming jump (source & my review here). BECI’s author ignores part of the report and selectively takes quotes out of context to deceive his readers and make them believe the report validates BECI.

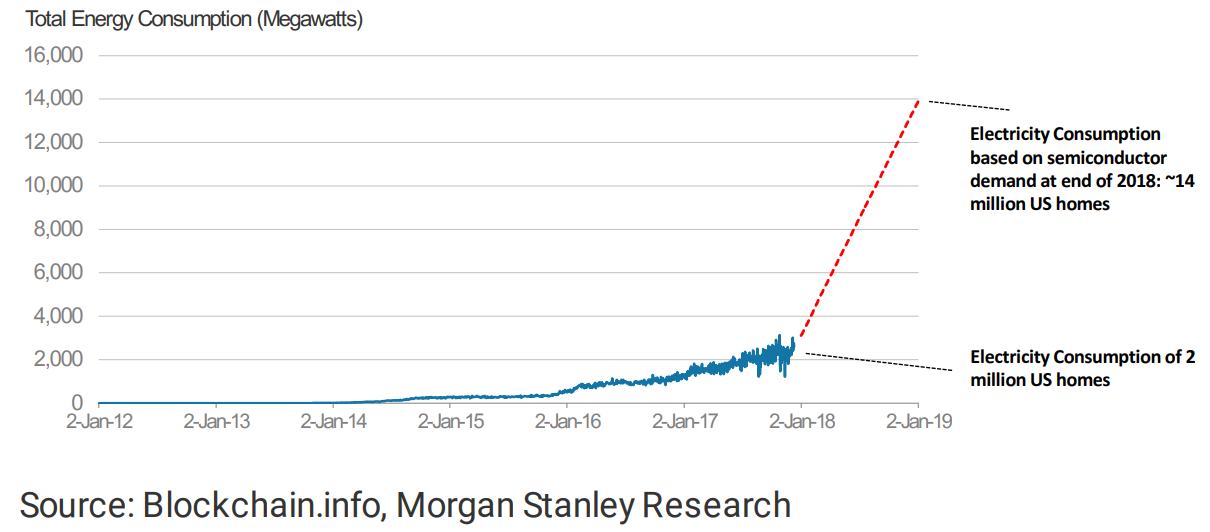

For example the report presents a chart showing 2500 MW, see page 1, exhibit 1:

The chart agrees with my estimate of 1620/2100/3136 MW and disagrees with BECI’s 4600 MW. [3] BECI’s author chooses to ignore and not mention the chart.

Here is the meat of the first section of Morgan Stanley’s report:

The analysts wrote there are “published assumptions [mrb: mine] that Bitcoin is using the equivalent of 2500 megawatts/hour.”

However “economic/pricing-based attempts to estimate electricity consumption [mrb: Digiconomist BECI] have indicated that actual usage levels could be even 50% more than that (~4000 megawatts/hour)”

And they conclude: “as a bottom-up check, we have looked at reports of Bitcoin mining ASIC revenue and orders, rig power consumption and forecasts, and conclude that current use estimates are probably in the right general range.”

Clearly they do not confirm an exact figure but just the “general range” which is 2500 to more than 4000 MW. BECI’s author quotes only the last few words (“current use estimates are probably in the right general range”) to misrepresent the report’s conclusion as validating BECI.

Furthermore, my critique of the Morgan Stanley report uncovered multiple errors which are repeated by BECI’s author:

- “the hash-rate methodology uses a fairly optimistic set of efficiency assumptions”: this is false.

- “the most efficient mining rigs used by Bitmain in its facilities are not yet widely available”: this is false.

- “[Ordos] implies total hourly Bitcoin electricity consumption is well more than 2700 megawatts/hour (23 terawatt hours/year)”: the analysts’ numbers are incorrect, the Ordos mine implies 1690-2020 MW (14.8-17.7 TWh/yr).

- “many data centers around the world have 30 to 40 percent of electricity costs going to cooling [mrb: PUE=1.43-1.67]”: no studies support this, data points show otherwise.

- “may not allow enough for electricity consumption by cooling and networking gear”: electrical overhead is typically small, eg. PUE of 1.11 to 1.33, therefore my upper bound is still likely an accurate “upper bound” as it is unrealistically pessimistic by a lot more than 11 or 33% (it assumes all miners use the least efficient ASICs!)

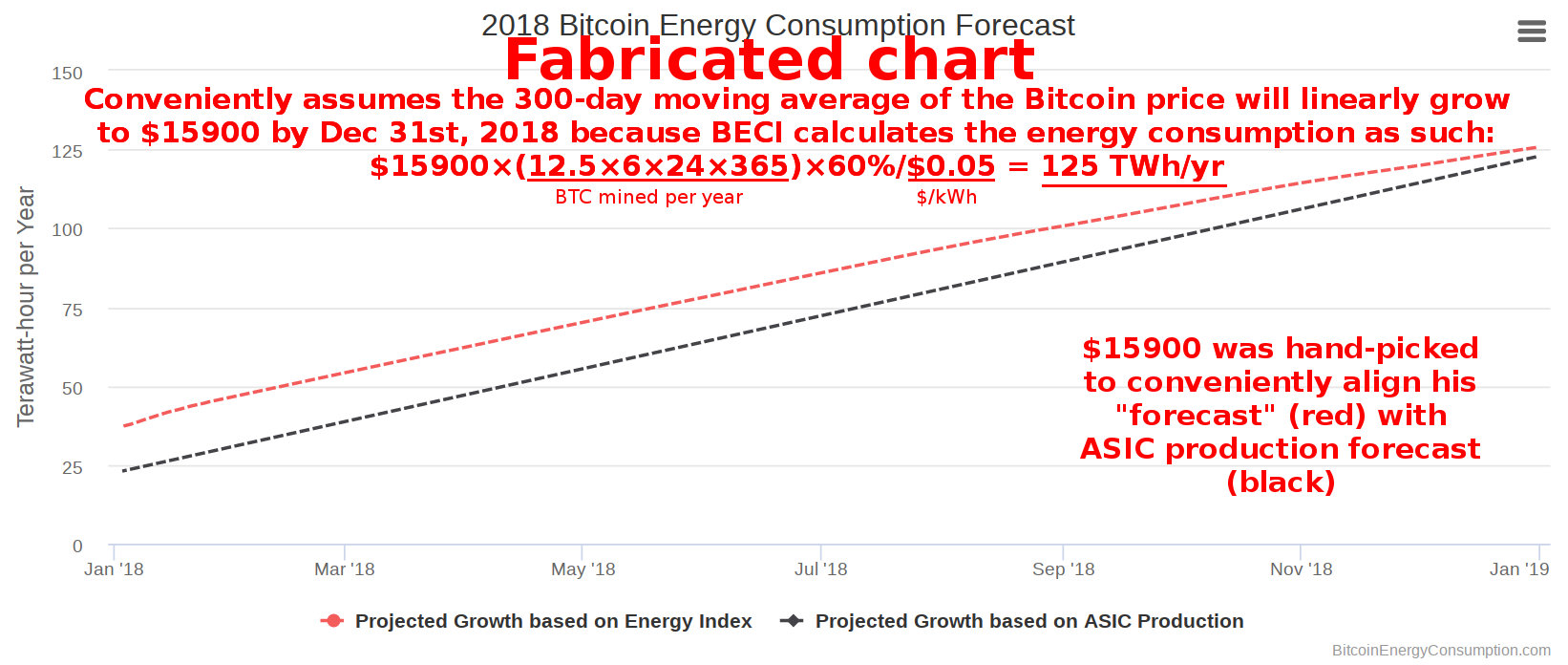
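The unit conversions used in this section are easy to verify. The sketch below converts a continuous power draw in MW to TWh/yr and turns a cooling share into a PUE. It assumes the “30 to 40 percent” refers to the share of total facility electricity, which is the interpretation that yields the PUE of 1.43-1.67 quoted above. Function names are illustrative.

```python
HOURS_PER_YEAR = 8760


def mw_to_twh_per_year(mw):
    """Energy used in a year by a load drawing `mw` megawatts continuously."""
    return mw * HOURS_PER_YEAR / 1e6    # MWh -> TWh


def pue_from_overhead_fraction(fraction):
    """PUE when `fraction` of total facility electricity goes to overhead such as cooling."""
    return 1 / (1 - fraction)


# Power figures discussed above: the Ordos-implied range and the analysts' figure.
for mw in (1690, 2020, 2700):
    print(f"{mw} MW = {mw_to_twh_per_year(mw):.1f} TWh/yr")

# Cooling shares claimed by the analysts.
for fraction in (0.30, 0.40):
    print(f"{fraction:.0%} overhead -> PUE {pue_from_overhead_fraction(fraction):.2f}")
```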

Flaw #7: Fabricated forecast chart

BECI’s author made up an energy forecast chart in Criticism and Validation that is literally fabricated to tend toward the same numbers as Morgan Stanley’s forecast of 120 TWh/yr by January 2019.

(Note that the Morgan Stanley analysts made various errors, eg. multiplying instead of dividing; their corrected model actually forecasts 60 TWh/yr.)

Remember that BECI’s model assumes 60% of mining revenues are spent on electricity, and revenues are calculated from a moving average of the Bitcoin price. But he cannot forecast the price a year from now, so he picked an arbitrary high price ($15900) that made his chart lines tend toward 120 TWh/yr. There is no other way BECI can “forecast” an energy consumption. Given 2 static parameters (“60%” and “$0.05/kWh”) the only other parameter he can manipulate is the Bitcoin price. Blatant deceitfulness.

Flaw #8: “Bitcoin Cash” included in BECI

The cryptocurrency community makes a clear distinction between Bitcoin and Bitcoin Cash as they are two separate projects, two separate blockchains. However the Bitcoin Energy Consumption Index deceptively includes Bitcoin Cash miners:

“Note that the Index contains the aggregate of Bitcoin and Bitcoin Cash (other forks of the Bitcoin network are not included).”

The author should either not include Bitcoin Cash or should maintain a separate index for it. But, as he has demonstrated so far, he will do anything to inflate his electricity consumption figures.

[Update: On 01 October 2019 he claimed to have removed Bitcoin Cash from the index. Of course BECI being badly flawed means this did not change his electricity consumption figures: 73.121 TWh/year was reported for the day before, the day of, and the day after the change. A change of -3% would have been expected given the BCH hashrate was 3% of the BTC hashrate at this date. Go figure.]

Flaw #9: Claim that CBECI supports BECI

Researchers at Cambridge who also perceived BECI as flawed decided to launch the Cambridge Bitcoin Electricity Consumption Index (CBECI). On, or shortly after, 26 July 2019, the author of BECI claimed that CBECI “failed to produce significantly different estimates” and “the Bitcoin Energy Consumption Index and the Cambridge Bitcoin Electricity Consumption Index are mostly in perfect agreement with each other.”

This is a lie. CBECI and BECI are far from being in “perfect agreement”:

- During the entire year 2018, BECI was on average 51% higher than CBECI.

- From the first available BECI historical figures to the day this claim was made (2017-02-10 to 2019-07-26), BECI was on average 39% higher than CBECI.

- During 2017-2018, there were 125 days where the difference was 60% or greater.

See cbeci-vs-beci.csv for comparison data. On Twitter the author of BECI further lied and claimed the overall difference was 20% and avoided the discussion when confronted 4 times in a row.
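For readers who want to run this kind of comparison themselves, here is one way to compute such statistics from two daily series. It is a sketch only: it takes the mean of the daily relative differences, which is one reasonable definition of “on average X% higher”, and the numbers in it are made-up toy values, not taken from cbeci-vs-beci.csv.

```python
from statistics import mean


def average_excess(beci, cbeci):
    """Mean of the daily relative differences (BECI - CBECI) / CBECI."""
    return mean((b - c) / c for b, c in zip(beci, cbeci))


def days_at_or_above(beci, cbeci, threshold):
    """Number of days where BECI exceeds CBECI by at least `threshold` (0.6 = 60%)."""
    return sum(1 for b, c in zip(beci, cbeci) if (b - c) / c >= threshold)


# Toy values in TWh/yr for illustration only; NOT real BECI or CBECI data.
beci_toy = [60.0, 75.0, 48.0]
cbeci_toy = [40.0, 50.0, 40.0]

print(f"average excess: {average_excess(beci_toy, cbeci_toy):.0%}")
print(f"days at or above 50%: {days_at_or_above(beci_toy, cbeci_toy, 0.5)}")
```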

Flaw #10: Same electricity consumption reported for months at a time

BECI reported a constant consumption of 73.12146138 TWh/year for more than 9 months: from 31 July 2018 to 18 November 2018, and from 17 July 2019 to this day (06 January 2020). Why BECI is stuck at 73.12146138 TWh/year is a mystery. I questioned the author, who vaguely explained that sometimes he “adjusts things manually” and refused to provide further details. Obviously this is evidence BECI is not a real-time estimate, but a result of the author’s haphazard and undocumented hacks.
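The “more than 9 months” figure follows directly from the two date ranges. A quick check, assuming an average month of 30.44 days:

```python
from datetime import date

# The two periods during which BECI reported the same value, as given above.
PLATEAUS = [
    (date(2018, 7, 31), date(2018, 11, 18)),
    (date(2019, 7, 17), date(2020, 1, 6)),
]

days = [(end - start).days for start, end in PLATEAUS]
total_days = sum(days)
months = total_days / 30.44    # assumption: average month length in days

print(f"{days} -> {total_days} days, about {months:.1f} months")
```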

How to fix BECI?

Firstly, BECI should average the Bitcoin price over a fixed period of time (eg. 60 days) instead of frequently adjusting it between 60 and ~439 days.

Secondly, BECI should accurately estimate the percentage of mining revenues spent on electricity instead of hand-picking 60%. We can calculate the real-world percentage for various models of mining rigs. I have done this for all models released between December 2014 and March 2018 in Economics of mining, and found all lifetime average percentages were between 6.3% and 38.6%, with the percentage of the S9 (the most popular mining machine, making up 70-80% of the market share) at 12.3%.

Then we can refine the percentage bounds by estimating the worst-case and best-case market share distribution of mining rig models that would lead to the highest or lowest energy consumption.

However with a market share distribution, the model can be vastly simplified: we get the global average energy efficiency (joule per gigahash), we multiply it with the hashrate, and we obtain the real-time global energy consumption. Simple. This is precisely the approach I followed in Electricity consumption of Bitcoin: a market-based and technical analysis. As of 11 January 2018 my analysis estimates 14.19/18.40/27.47 TWh/yr (lower bound/best guess/upper bound) which is 2.8×/2.2×/1.5× lower than BECI’s claim of 40.24 TWh/yr.
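The simplified model fits in a few lines. In the sketch below, the rig mix and the hashrate are hypothetical placeholders chosen for illustration, not figures from my analysis; shares are shares of hashrate, not of units sold. The last part recomputes the ratios against BECI from the numbers quoted above.

```python
HOURS_PER_YEAR = 8760


def average_efficiency(mix):
    """Hashrate-weighted average efficiency in J/GH; mix = [(hashrate share, J/GH), ...]."""
    total_share = sum(share for share, _ in mix)
    return sum(share * efficiency for share, efficiency in mix) / total_share


def twh_per_year(hashrate_gh_per_s, joules_per_gh):
    """Annual consumption for a given global hashrate and average efficiency."""
    watts = hashrate_gh_per_s * joules_per_gh    # GH/s * J/GH = W
    return watts * HOURS_PER_YEAR / 1e12         # Wh -> TWh


# Hypothetical mix and hashrate (15 EH/s), for illustration only.
toy_mix = [(0.75, 0.10), (0.25, 0.25)]
toy_hashrate_gh_per_s = 15e9
toy_estimate = twh_per_year(toy_hashrate_gh_per_s, average_efficiency(toy_mix))
print(f"toy estimate: {toy_estimate:.1f} TWh/yr")

# Ratios between BECI's claim and my estimates as of 11 January 2018.
BECI_TWH = 40.24
MY_ESTIMATES_TWH = {"lower bound": 14.19, "best guess": 18.40, "upper bound": 27.47}
ratios = {name: BECI_TWH / twh for name, twh in MY_ESTIMATES_TWH.items()}
for name, ratio in ratios.items():
    print(f"BECI is {ratio:.1f}x my {name}")
```

The only inputs are the hashrate, which is observable, and the hardware mix, which can be bounded from market data. No price averaging is involved.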

Conclusion

BECI’s author claims his economics-based model is superior to a hashrate/hardware-based model. But the truth is there is no way around the latter. His core parameter (60%) is not validated by evidence and must be determined from the market share of hardware models of mining rigs.

Furthermore, his other main parameter (439-day averaging period) is arbitrary, unjustified, undocumented and undisclosed, making BECI’s figures themselves arbitrary.

As I previously offered, if you are a journalist, analyst, or anyone who wants to write about Bitcoin’s energy consumption, send me a note. I will be happy to review your work and provide feedback. It is professionally unacceptable to publish analyses that are as far removed from reality as BECI.

Footnotes

[1] BECI’s author was unable to remember when he changed the hardcoded “65%” to “60%” (he said it was done “probably around the end of March/start of April [2017].”)

[2] As of 14 March 2018, Bitcoin’s 60-day average is $10.3k, the 439-day average is half that at $5.1k, and the 1000-day average is half that at $2.5k.

[3] Figures as of January 3rd or 11th, 2018.