I reviewed the following two research reports published by analysts at Morgan Stanley on the subject of Bitcoin electricity usage:

- Bitcoin ASIC production substantiates electricity use; points to coming jump, by James E Faucette et al., January 3, 2018

- Bitcoin demand > EV demand?, by Nicholas J Ashworth et al., January 10, 2018

The first report estimates current electricity consumption at 2500 MW, which agrees with my own estimate of 1620/2100/3136 MW (lower bound/best guess/upper bound) as of January 11, 2018.

However, I spotted a few errors.

- 1. Math error (multiplying instead of dividing)

- 2. PUE of mining farms as low as 1.03-1.33

- 3. Inconsistent PUE math

- 4. Hashrate method makes optimistic and pessimistic assumptions

- 5. Only a fraction of the Ordos farm mines bitcoins

- 6. Antminer S9 dominates the market

- 7. Electricity costs

- 8. Bitmain’s direct sales model: ONE global price

- 9. Transaction fees not accounted for

- Footnotes

1. Math error (multiplying instead of dividing)

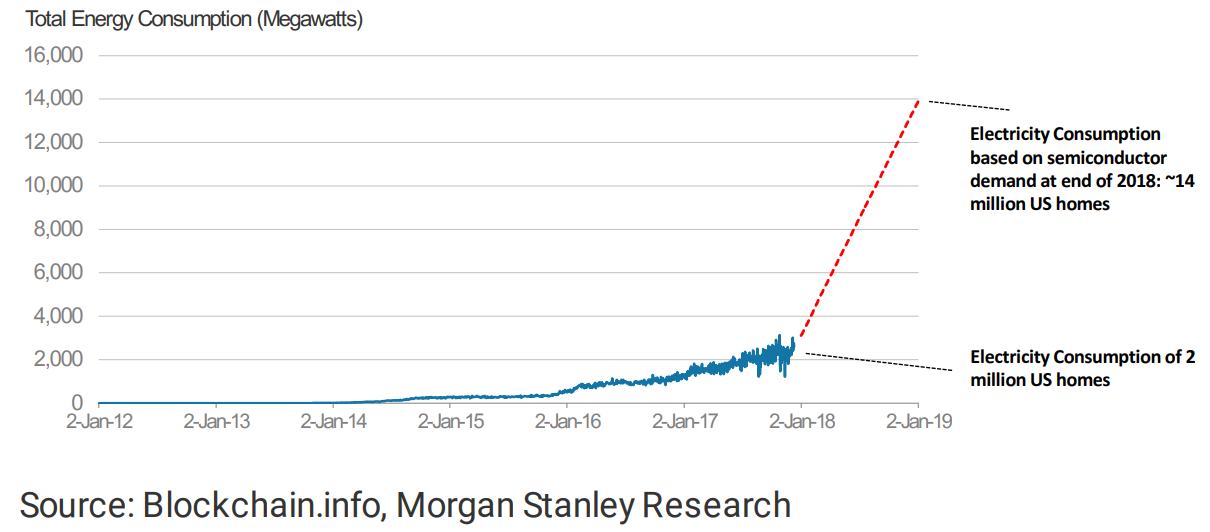

The analysts attempt to forecast consumption 12 months ahead (ca. January 2019) and claim it may be “more than 13 500/hour [sic] megawatts.”

Based on TSMC production orders for 15-20k 300mm wafer-starts of Bitcoin ASICs per month, they estimate that “up to 5-7.5M new rigs” could be added. They claim to base their electricity consumption calculation on 6.5M rigs, but their numbers line up only with the upper bound of 7.5M:

7.5M × 1300 (watts) × 1.4 (efficiency improvement) = 13 650 MW

The multiplication by 1.4 is meant to account for the “40% efficiency improvement” brought by new rigs, and this is their error: they multiply instead of dividing [1]. More energy-efficient chips made from a given volume of wafers consume less, not more, per mm² of die area. Correcting this error gives an estimate of 6950 MW, about half their published number (13 500 MW).
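The difference between the two operations can be checked with a few lines of Python. This is a minimal sketch that reuses only the report's inputs (7.5M rigs, 1300 W per rig, a 1.4 efficiency factor); the function and constant names are hypothetical, chosen for illustration.

```python
RIGS = 7.5e6            # upper bound of new rigs (report's figure)
WATTS_PER_RIG = 1300    # report's per-rig power assumption
EFFICIENCY_GAIN = 1.4   # "40% efficiency improvement"

def forecast_mw(rigs: float, watts: float, gain: float, divide: bool) -> float:
    """Power in MW for `rigs` rigs drawing `watts` W each, adjusted for an efficiency gain.

    divide=False reproduces the report's multiplication; divide=True applies the
    correction (more efficient chips draw less power for the same wafer volume).
    """
    base_mw = rigs * watts / 1e6
    return base_mw / gain if divide else base_mw * gain

reported = forecast_mw(RIGS, WATTS_PER_RIG, EFFICIENCY_GAIN, divide=False)
corrected = forecast_mw(RIGS, WATTS_PER_RIG, EFFICIENCY_GAIN, divide=True)
print(f"report: {reported:,.0f} MW, corrected: {corrected:,.0f} MW")
```

Dividing gives roughly 6960 MW, in line with the ~6950 MW figure quoted above.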

2. PUE of mining farms as low as 1.03-1.33

The Morgan Stanley analysts assume “60% direct electricity usage (i.e. 40% of total electricity consumption is used for non-hashing operations like cooling, network equipment, etc.)” In data center terminology, this corresponds to a PUE (power usage effectiveness) of 100/60 = 1.67.

However, no study supports such terrible PUE values for the mining industry [2]. In reality, most mining farms aggressively optimize their PUE:

- Gigawatt Mining builds air-cooled mining farms with a PUE of 1.03-1.05 [3].

- Bitfury data centers are highly energy-efficient; for example, their 40 MW Norway data center has a PUE of 1.05 [4], and their CEO emphasized that their Iceland data center does not have a high PUE [2].

- The well-known Bitmain Ordos mine reportedly has a PUE of either 1.11 or 1.33, depending on which journalist’s numbers are trusted.

Google has optimized some of its data centers down to a PUE of 1.06 [5], even though electricity is not one of its main costs. So it makes complete sense to find miners, for whom electricity is one of the main costs, in the same range.
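To make the gap concrete, the sketch below converts between the “direct usage” share and PUE, and shows what fraction of total power goes to non-hashing loads at each PUE cited in this section. It relies only on the definition PUE = total facility power / hashing power; the helper names are hypothetical.

```python
def pue_from_direct_share(direct_share: float) -> float:
    """PUE = total facility power / IT (here: hashing) power."""
    return 1.0 / direct_share

def overhead_share(pue: float) -> float:
    """Fraction of total power spent on non-hashing loads (cooling, network, ...)."""
    return 1.0 - 1.0 / pue

ms_pue = pue_from_direct_share(0.60)   # Morgan Stanley's 60% direct-usage assumption
print(f"Morgan Stanley: PUE {ms_pue:.2f}, overhead {overhead_share(ms_pue):.0%}")

# PUE figures cited in this section
cited = [("Gigawatt Mining (low)", 1.03), ("Bitfury Norway", 1.05),
         ("Google (lowest)", 1.06), ("Ordos (low)", 1.11), ("Ordos (high)", 1.33)]
for name, pue in cited:
    print(f"{name}: PUE {pue:.2f}, overhead {overhead_share(pue):.1%}")
```

Even the higher Ordos figure puts overhead at about 25% of total power, well below the 40% the analysts assume.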

3. Inconsistent PUE math

Combining their future consumption estimate with their PUE estimate, the resulting global consumption should be 13 500 × 1.67 × 90% utilization ≈ 20 250 MW. Yet they calculate “nearly 16 000 MW.”

20 250 ≠ 16 000. The math is inconsistent.

Correcting their math and parameters gives 6950 × 1.11 (or 1.33) × 90% ≈ 6950 (or 8300) MW.

In summary, Morgan Stanley’s first report forecasts that consumption ca. January 2019 will be 13 500-16 000 MW (120-140 TWh/yr annualized), whereas fixing multiple errors yields a forecast of 6950-8300 MW (60-75 TWh/yr annualized).
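The chain of calculations in sections 1-3 (hashing power, then PUE, then utilization, then annualization) can be sketched as follows. The helper names are hypothetical, and the 8760 hours/yr constant assumes continuous operation over a non-leap year.

```python
HOURS_PER_YEAR = 8760

def total_mw(hashing_mw: float, pue: float, utilization: float) -> float:
    """Facility-level power: hashing power scaled by PUE and fleet utilization."""
    return hashing_mw * pue * utilization

def twh_per_year(mw: float) -> float:
    """Annualize a constant power draw: MW * hours / 1e6 = TWh."""
    return mw * HOURS_PER_YEAR / 1e6

# Morgan Stanley's own inputs: 13 500 MW, 60% direct usage, 90% utilization
ms_consistent = total_mw(13_500, 1 / 0.60, 0.90)
print(f"consistent with their inputs: {ms_consistent:,.0f} MW (they report ~16 000 MW)")

# Corrected inputs: ~6950 MW of rigs, PUE of 1.11 or 1.33
for pue in (1.11, 1.33):
    mw = total_mw(6_950, pue, 0.90)
    print(f"PUE {pue}: {mw:,.0f} MW, {twh_per_year(mw):.1f} TWh/yr")
```

The corrected runs annualize to roughly 61 and 73 TWh/yr, within the 60-75 TWh/yr range given above.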

4. Hashrate method makes optimistic and pessimistic assumptions

The report claims “the hash-rate methodology uses a fairly optimistic set of efficiency assumptions.” This is not true. Perhaps they were referring to other people’s hashrate methodology, but mine, as explained in the introduction, makes both optimistic and pessimistic assumptions (miners using either the most or the least efficient ASICs).

5. Only a fraction of the Ordos farm mines bitcoins

The report continues by attempting to extrapolate the global electricity consumption from the Ordos mine:

- They fail to account for the fact that only 7/8 of the farm mines bitcoins; the other 1/8 mines litecoins.

- The media publish slightly different power consumption figures, implying either 29.2 or 35 MW for the Bitcoin rigs, depending on the journalist.

- They build their calculations on a grossly rounded estimate of its hashrate (“4% of ~6M TH/s”), but it can be calculated more precisely because we know the farm has 21k Bitcoin rigs (~263k TH/s).

Correcting these errors and scaling the mine’s power consumption to a global hashrate of 15.2M TH/s implies a global power consumption of either 1690 or 2020 MW (14.8 or 17.7 TWh/yr), depending on the journalist. This is significantly less than the analysts’ 2700 MW (23 TWh/yr).
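The extrapolation itself is a simple proportion: assume the rest of the network uses the same power per TH/s as the Ordos farm's Bitcoin rigs. A minimal sketch using the figures above (the function name is hypothetical):

```python
GLOBAL_THS = 15.2e6   # global hashrate, TH/s (January 2018)
ORDOS_THS = 263e3     # ~21k Bitcoin rigs at the Ordos farm

def extrapolate_global_mw(farm_mw: float, farm_ths: float, global_ths: float) -> float:
    """Assume the whole network has the same power per TH/s as this farm."""
    return farm_mw * global_ths / farm_ths

for farm_mw in (29.2, 35.0):   # Bitcoin-only power, per the two sets of press figures
    mw = extrapolate_global_mw(farm_mw, ORDOS_THS, GLOBAL_THS)
    print(f"{farm_mw} MW at Ordos -> {mw:,.0f} MW global, {mw * 8760 / 1e6:.1f} TWh/yr")
```

The unrounded outputs (about 1688 and 2023 MW) match the rounded 1690 and 2020 MW figures above.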

6. Antminer S9 dominates the market

The report states that “the most efficient mining rigs used by Bitmain in its facilities [Antminer S9/T9] are not yet widely available” and implies that, since they are not widely available, the average rig must be a different, less efficient model.

The analysts conflate market availability with market share.

Bitmain claimed in mid-2017 that it had a 70% market share, and everything suggests it is even higher today. The Antminer S9/T9 has been the only Bitcoin mining rig sold by Bitmain for the last 20 months, and batches of tens of thousands sell out within minutes at shop.bitmain.com. Bitmain is buying ~20k 16nm wafers a month and arguably accounts for most of the ~10k wafers a month since 3Q17 cited by the Morgan Stanley analysts.

~10k wafers = ~270k S9 = ~3.6 EH/s manufactured per month.

That is more than the 1-3 EH/s added to the global hashrate each month during 3Q17 and 4Q17 (it takes months to go from wafer production to mining). Bitmain rigs make up virtually all of the hashrate being deployed to this day.
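The wafer-to-hashrate conversion can be sketched as below. The 27 rigs per wafer is simply the ratio implied by “~10k wafers = ~270k S9”; the 13.5 TH/s per S9 is an assumed nominal hashrate, not a figure from the reports.

```python
WAFERS_PER_MONTH = 10_000
S9_PER_WAFER = 27       # implied by "~10k wafers = ~270k S9"
THS_PER_S9 = 13.5       # assumption: nominal Antminer S9 hashrate, TH/s

rigs = WAFERS_PER_MONTH * S9_PER_WAFER
eh_per_month = rigs * THS_PER_S9 / 1e6   # 1 EH/s = 1e6 TH/s
print(f"{rigs:,} S9 -> {eh_per_month:.1f} EH/s manufactured per month")

# Compare with the upper end of the 1-3 EH/s added monthly in 3Q17/4Q17
print("exceeds monthly hashrate growth:", eh_per_month > 3.0)
```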

7. Electricity costs

As for Morgan Stanley’s second report, it merely quotes the first report’s flawed prediction of 120-140 TWh/yr ca. January 2019. Other than that, it is generally of better quality than the first, and my criticism concerns relatively minor points.

In it, the analysts calculate the cost of mining one bitcoin by assuming electricity costs between 6¢ and 8¢ per kWh. Their source is EIA figures, grossly rounded averages for entire geographical regions.

Miners do not pay average prices. They choose the least expensive electric utilities in these regions.

For example, where the analysts quote 7.46¢/kWh for Washington State (see their exhibit 5), Giga Watt, a mining farm located in that state, actually pays 2.8¢/kWh. In my opinion, the industry average is probably around 5¢/kWh.
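To see how much the price assumption matters, here is a sketch of the daily electricity cost of a single rig at each price mentioned. It assumes a 1300 W rig (the per-rig figure used in the first report) running around the clock, and it covers electricity only, not hardware; the helper name is hypothetical.

```python
RIG_KW = 1.3   # assumption: 1300 W per rig, as in the first report

def daily_cost_usd(price_per_kwh: float, kw: float = RIG_KW) -> float:
    """Electricity cost of running one rig for 24 hours."""
    return kw * 24 * price_per_kwh

prices = [("EIA Washington average", 0.0746),
          ("Giga Watt", 0.028),
          ("estimated industry average", 0.05)]
for label, price in prices:
    print(f"{label}: ${daily_cost_usd(price):.2f}/day per rig")
```

Because the cost is linear in price, Giga Watt's rate cuts the electricity bill by about 62% relative to the EIA state average.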

8. Bitmain’s direct sales model: ONE global price

When calculating the cost of mining a bitcoin, they also assume that an Antminer S9 costs $7000 outside China. In reality, only individual retail sales on third-party sites such as eBay reach such high prices. Large-scale miners, who are representative of the average mining farm, all pay the same price even outside China: Bitmain’s direct sales price, which was $2320 for the batches sold around the time the report was written.

9. Transaction fees not accounted for

Finally, they imply that the cost of mining one bitcoin is a “breakeven point”, but this is not exactly true because it ignores transaction fees. At the time of the report, transaction fees collected by miners averaged more than 600 BTC per day and boosted miners’ global daily revenue by 1.33× (from 1800 to 2400 BTC); hence the true breakeven point was 1.33× lower.
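The fee adjustment on its own can be sketched as follows: the same daily mining cost is spread over block subsidy plus fees instead of the subsidy alone. The 144 blocks/day and 12.5 BTC subsidy are protocol values in effect at the time; the function name is hypothetical, and this covers only the fee correction, not the full breakeven calculation.

```python
BLOCKS_PER_DAY = 144       # one block every ~10 minutes
BLOCK_SUBSIDY_BTC = 12.5   # subsidy in effect from mid-2016 to mid-2020
FEES_BTC_PER_DAY = 600     # average fees at the time of the report

subsidy_per_day = BLOCKS_PER_DAY * BLOCK_SUBSIDY_BTC    # 1800 BTC
revenue_per_day = subsidy_per_day + FEES_BTC_PER_DAY    # 2400 BTC
fee_multiplier = revenue_per_day / subsidy_per_day      # ~1.33

def breakeven_with_fees(cost_per_subsidy_btc: float) -> float:
    """Cost per BTC once fees are counted as revenue alongside the subsidy."""
    return cost_per_subsidy_btc / fee_multiplier

print(f"fee multiplier: {fee_multiplier:.2f}")
```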

Correcting these errors, that is, assuming an electricity cost of $0.05/kWh, the same rig price globally, and the (unusually) high fees of December/January, gives a true breakeven point of $2300, significantly below the analysts’ figures of $3000 to $7000.

Footnotes

1. I would argue that it is incorrect either to multiply or to divide. Newer chips consume less energy per silicon gate, not per mm² of die area. Power consumption per mm² is roughly the same between two different generations of chips, because each gate consumes less but more gates can be packed per mm². For example, a Radeon R9 390 and a Radeon RX Vega 64 (about the same die area: 438 vs 486 mm²) are manufactured at two very different process nodes (28nm vs 14nm), yet they have the same ~300 W TDP. Nonetheless, I went ahead with the division to follow the analysts’ argument.

2. A quote from Bitfury CEO Valery Vavilov is often misinterpreted. In an interview he offhandedly said “Many data centers around the world have 30 to 40 percent of electricity costs going to cooling,” which corresponds to a PUE of 1.43 to 1.67. Obviously he was referring to traditional data centers outside the mining industry. In fact, he emphasized “This [high PUE] is not an issue in our Iceland data center.”

3. Source: personal discussion with CEO Dave Carlson.

4. Bitfury’s 40 MW Mo i Rana data center in Norway has a PUE of 1.05 or lower.

5. Source: Google, “Efficiency: How we do it”.